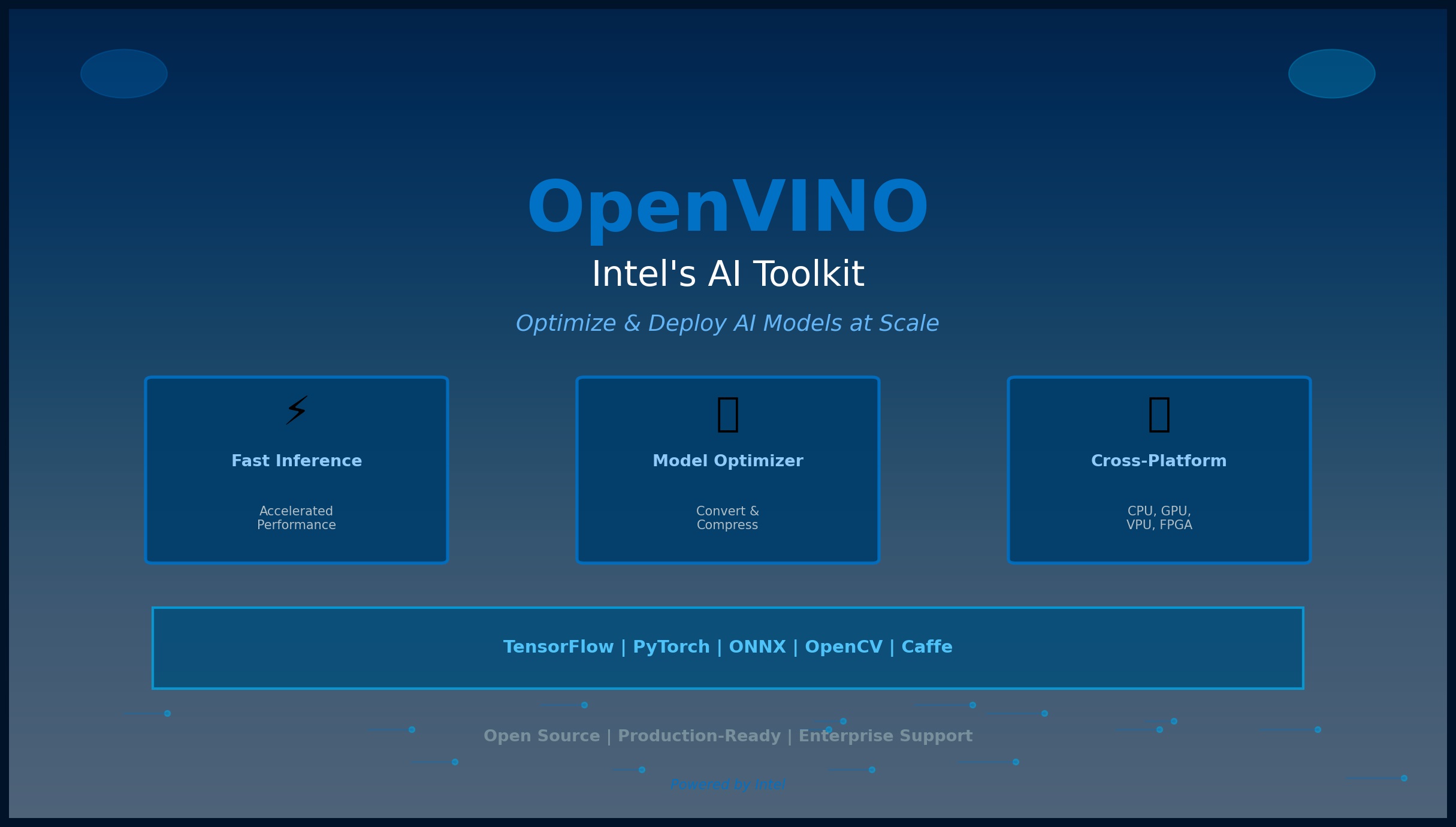

OpenVINO: Intel's AI Toolkit

In the world of artificial intelligence, developing a powerful deep learning model is only half the battle. The real challenge often lies in deploying that model to run efficiently, quickly, and affordably on real-world hardware. This is where OpenVINO™ comes in.

If you've ever wondered how to make your AI applications blazing-fast on anything from a laptop to a smart camera, this guide is for you. We'll break down exactly what OpenVINO is, why it's a game-changer for AI deployment, and how it works.

The Core Definition: What is OpenVINO?

OpenVINO™ (Open Visual Inference & Neural Network Optimization) is a free, open-source toolkit developed by Intel. Its primary purpose is to optimize and accelerate AI inference across a wide range of Intel hardware.

In simpler terms, OpenVINO acts as a universal translator and performance booster for your trained AI models. It takes a model built in a popular framework such as TensorFlow, PyTorch, or PaddlePaddle, or exported to the ONNX format, and prepares it to run efficiently on Intel processors (CPUs), integrated and discrete graphics (GPUs), and neural processing units (NPUs). Earlier releases also supported Intel's vision processing units (VPUs) and FPGAs.

The key problem it solves is bridging the gap between AI development and AI deployment, especially for edge AI applications where performance and efficiency are critical.
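To make the "translator" idea concrete, here is a minimal sketch of loading a model and running one inference with OpenVINO's Python API. It assumes OpenVINO 2023.1 or newer (installed with pip install openvino) and a model with one input and one output; the function name infer_once is hypothetical, chosen for this example.

```python
def infer_once(model_path, input_data, device="CPU"):
    """Load a model, compile it for one device, and run a single inference.

    Assumes OpenVINO 2023.1+ and a model with one input and one output.
    """
    import openvino as ov  # imported lazily so this module loads even without OpenVINO

    core = ov.Core()                              # entry point that discovers device plugins
    model = core.read_model(model_path)           # reads OpenVINO IR (.xml) or ONNX (.onnx)
    compiled = core.compile_model(model, device)  # the device string selects the plugin
    results = compiled([input_data])              # synchronous inference on one input
    return results[compiled.output(0)]            # look up the result by output port
```

Called as infer_once("model.onnx", batch) with a NumPy array shaped to the model's input, it returns a NumPy array. Targeting different hardware only means changing the device argument, for example from "CPU" to "GPU".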

Why is OpenVINO So Important? The Problem It Solves

A trained AI model can be computationally expensive. Running it directly on a target device can lead to high latency (slow responses), excessive power consumption, and poor hardware utilization. OpenVINO tackles these issues head-on with several key benefits:

- Faster Inference: OpenVINO's core strength is cutting inference latency and raising throughput. It applies optimizations such as graph fusion, quantization to lower precision (for example, INT8), and kernels tuned for each hardware target to get the most out of the underlying hardware.

- Maximized Hardware Utilization: It is specifically designed to harness the full power of the Intel hardware ecosystem. This means you can achieve high performance not just on dedicated GPUs, but also on standard CPUs that are already in millions of devices.

- Write Once, Deploy Everywhere: Developers can optimize their model once with OpenVINO and deploy it across different Intel devices without rewriting the code. This flexibility is invaluable for scaling applications from the edge to the cloud.

- Support for Heterogeneous Execution: OpenVINO can distribute work across the different compute units on the same device. In its HETERO mode, for example, it can run the parts of a model that the integrated GPU supports on the GPU and fall back to the CPU for the rest, while its AUTO mode selects a suitable device automatically.
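Quantization, one of the optimizations listed above, can be illustrated without OpenVINO itself. The pure-Python sketch below shows symmetric per-tensor INT8 quantization: each float is divided by a single scale and rounded to an 8-bit integer. It is a conceptual illustration only; OpenVINO performs quantization through its companion NNCF library using calibration data, and the function names here are hypothetical.

```python
def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization of a list of floats."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # one float scale for the whole tensor
    q = [max(-127, min(127, round(v / scale))) for v in values]  # clamp to the int8 range
    return q, scale


def dequantize_int8(q, scale):
    """Map INT8 values back to approximate floats."""
    return [x * scale for x in q]


weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
print(q)  # [50, -127, 0, 100]
```

Each weight now fits in one byte instead of four, and the round-trip error per value is at most half the scale. Trading that small accuracy loss for less memory traffic and faster integer arithmetic is what makes INT8 inference attractive.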

How Does OpenVINO Work? The Key Components

The OpenVINO toolkit is built around a straightforward two-step workflow (convert the model, then run it), complemented by a library of ready-to-use models.

1. Model Optimizer

The first step is to convert your pre-trained model into OpenVINO's optimized format, the Intermediate Representation (IR). The Model Optimizer is a Python-based command-line tool that:

- Takes models from popular frameworks (TensorFlow, PyTorch, ONNX, Keras, etc.).

- Removes layers that are only needed during training, such as dropout.

- Fuses multiple operations into single, more efficient ones.

- Performs other optimizations to prepare the model for inference.
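Operation fusion is easiest to see with a common case: folding a batch-normalization layer into the linear (or convolution) layer before it. At inference time, batch normalization is just a per-channel scale and shift, so it can be absorbed into the preceding layer's weights and bias, removing one operation from the graph. The pure-Python sketch below illustrates that idea conceptually; it is not the Model Optimizer's actual code, and all function names are hypothetical.

```python
import math


def linear(weights, bias, x):
    """y[i] = sum_j weights[i][j] * x[j] + bias[i]"""
    return [sum(w * xj for w, xj in zip(row, x)) + b for row, b in zip(weights, bias)]


def batchnorm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-time batch normalization with one set of statistics per channel."""
    return [g * (yi - m) / math.sqrt(v + eps) + bt
            for yi, g, bt, m, v in zip(y, gamma, beta, mean, var)]


def fold_batchnorm(weights, bias, gamma, beta, mean, var, eps=1e-5):
    """Return new (weights, bias) so linear() alone equals linear() then batchnorm()."""
    new_w, new_b = [], []
    for row, b, g, bt, m, v in zip(weights, bias, gamma, beta, mean, var):
        factor = g / math.sqrt(v + eps)          # per-channel scale
        new_w.append([w * factor for w in row])  # W' = W * factor
        new_b.append((b - m) * factor + bt)      # b' = (b - mean) * factor + beta
    return new_w, new_b


# Arbitrary example parameters for a layer with two output channels
W, b = [[1.0, 2.0], [0.5, -1.0]], [0.1, -0.2]
gamma, beta, mean, var = [2.0, 0.5], [0.0, 1.0], [0.3, -0.1], [4.0, 0.25]
x = [1.5, -2.0]

unfused = batchnorm(linear(W, b, x), gamma, beta, mean, var)  # two operations
fused = linear(*fold_batchnorm(W, b, gamma, beta, mean, var), x)  # one operation
```

The two results agree up to floating-point rounding, but the fused version performs one pass over the data instead of two, which is the kind of saving fusion delivers across a whole network.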

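The output is a pair of files (.xml and .bin) that describe the model's architecture and its weights, respectively. This is the OpenVINO-ready model. Starting with the 2023.1 release, the Model Optimizer has been superseded by the convert_model Python function and the ovc command-line tool, which produce the same IR.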
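Here is a minimal sketch of the conversion step using OpenVINO's Python API. It assumes OpenVINO 2023.1 or newer, where ov.convert_model and ov.save_model are available. The helper names ir_paths and convert_to_ir are hypothetical; ir_paths only computes where the .xml/.bin pair will be written.

```python
from pathlib import Path


def ir_paths(source_path, output_dir="ir"):
    """Return the (.xml, .bin) paths an IR conversion of source_path will produce."""
    stem = Path(source_path).stem
    out = Path(output_dir)
    return out / f"{stem}.xml", out / f"{stem}.bin"


def convert_to_ir(source_path, output_dir="ir"):
    """Convert a framework model file (for example, ONNX) to OpenVINO IR on disk."""
    import openvino as ov  # imported lazily so this module loads even without OpenVINO

    xml_path, bin_path = ir_paths(source_path, output_dir)
    xml_path.parent.mkdir(parents=True, exist_ok=True)
    model = ov.convert_model(source_path)  # builds an in-memory ov.Model
    ov.save_model(model, str(xml_path))    # writes the .xml topology and matching .bin weights
    return xml_path, bin_path
```

By default, ov.save_model stores weights in FP16 to halve the .bin size. Pass compress_to_fp16=False if you need to keep the original FP32 weights.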

2. Inference Engine

Once you have the optimized IR model, the Inference Engine takes over (since the 2022.1 release it is called the OpenVINO Runtime). This C++ runtime loads the model and executes it efficiently on the target Intel hardware. It uses a "plugin" architecture, where each plugin is tailored for a specific type of hardware:

- CPU Plugin: Optimized for Intel CPUs, using vector instruction sets such as AVX2 and AVX-512.

- GPU Plugin: Leverages the power of Intel integrated and discrete GPUs.

- VPU Plugin: Designed for low-power inference on Intel's Vision Processing Units, such as the Movidius Myriad X. Support ended after the 2022.3 release.

- FPGA Plugin: For customizable, high-performance acceleration on Intel FPGAs. This plugin was available only in early releases.

The Inference Engine abstracts the hardware complexity, allowing your application to request inference on any supported device with a simple API call.
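Because the device is just a string passed at compile time, choosing hardware can be reduced to a small helper. The sketch below assumes OpenVINO 2023.1+ for list_devices, which asks the runtime which plugins found usable hardware. pick_device is a hypothetical, pure-Python policy that prefers a GPU and falls back to the AUTO device. Other valid device strings include "CPU", "NPU", and "HETERO:GPU,CPU", which splits one model across the GPU and CPU.

```python
def list_devices():
    """Map each device the runtime can use to its human-readable name."""
    import openvino as ov  # imported lazily so this module loads even without OpenVINO

    core = ov.Core()
    return {d: core.get_property(d, "FULL_DEVICE_NAME") for d in core.available_devices}


def pick_device(available, preferred=("GPU", "CPU")):
    """Return the first available device matching the preference order, else "AUTO".

    When several devices of one type exist, they are enumerated as "GPU.0", "GPU.1", etc.
    """
    for kind in preferred:
        for dev in available:
            if dev == kind or dev.startswith(kind + "."):
                return dev
    return "AUTO"
```

Passing pick_device(list_devices()) as the device argument to compile_model lets the same application run on a laptop with an integrated GPU or on a CPU-only server without code changes.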

3. Open Model Zoo (OMZ)

To accelerate development, OpenVINO provides the Open Model Zoo, a vast collection of free, pre-trained, and pre-optimized models for common tasks like:

- Image Classification

- Object Detection (e.g., YOLO, SSD)

- Facial Recognition

- Semantic Segmentation

- Human Pose Estimation

You can download these models and start building applications immediately, saving countless hours of training time.
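Models from the Open Model Zoo are fetched with the omz_downloader command-line tool, which ships in the openvino-dev pip package of releases that include the Open Model Zoo tools. The Python sketch below only wraps that command. The helper names and the example model name person-detection-retail-0013 are assumptions chosen for illustration, so check the zoo's model list for the model you need.

```python
import shutil
import subprocess


def omz_download_command(model_name, output_dir="omz_models"):
    """Build the omz_downloader command line for one model."""
    return ["omz_downloader", "--name", model_name, "--output_dir", output_dir]


def download_model(model_name, output_dir="omz_models"):
    """Run omz_downloader, failing early with a clear message if it is not installed."""
    if shutil.which("omz_downloader") is None:
        raise RuntimeError("omz_downloader not found; install the openvino-dev package")
    subprocess.run(omz_download_command(model_name, output_dir), check=True)
```

Intel-trained models download directly as IR files. Public models (for example, some YOLO variants) may also need the companion omz_converter tool before OpenVINO can load them.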

Who Uses OpenVINO? (Real-World Applications)

OpenVINO is used in a wide range of production AI applications, especially in industries that require high-performance computer vision and AI at the edge:

- Smart Retail: For automated checkout systems, shopper analytics, and inventory management.

- Industrial Manufacturing (IIoT): For quality control, defect detection on production lines, and predictive maintenance.

- Smart Cities: For traffic flow analysis, license plate recognition, and public safety monitoring.

- Healthcare: To analyze medical images (X-rays, CT scans) and assist in diagnostics.

- Robotics & Drones: To provide machines with the "vision" needed to navigate and interact with their environment.

Conclusion: Is OpenVINO Right for You?

If you are a developer, data scientist, or engineer looking to deploy AI models on Intel hardware, the answer is a resounding yes. OpenVINO is an essential toolkit that demystifies the complex process of AI deployment.

By providing a streamlined workflow to optimize AI models and unlock the performance of Intel's diverse hardware portfolio, it empowers you to build faster, more efficient, and more scalable AI applications from the edge to the cloud.

Frequently Asked Questions (FAQ)

Q1: Is OpenVINO free to use? A: Yes, OpenVINO is completely free and open-source under the Apache License 2.0. You can download, use, and even contribute to its development without any licensing fees.

Q2: Can I use OpenVINO with non-Intel hardware (like AMD or NVIDIA)? A: OpenVINO is primarily designed for and optimized on Intel hardware, but the CPU plugin also runs on other x86-64 processors, including AMD, and recent releases support ARM CPUs as well. However, you will not get the same hardware-specific acceleration as you would on an Intel processor. The main OpenVINO distribution does not include a plugin for NVIDIA GPUs.

Q3: What programming languages does OpenVINO support? A: The Model Optimizer (and the conversion tools that replaced it) is Python-based. The Inference Engine is written in C++ and exposes a C++ API, along with a C API and a full-featured Python API built on top of it. This makes it accessible for both high-level Python scripting and performance-critical C++ applications.